Right-Sizing AWS Lambda Memory: Step-by-Step Guide

Learn how to optimise AWS Lambda memory settings for better performance and reduced costs with this step-by-step guide tailored for UK SMBs.

Want to save money and boost performance on AWS Lambda? Choosing the right memory size is crucial. Allocate too much, and you overspend. Allocate too little, and your functions slow down or even fail. Here's what you need to know:

- Memory and CPU Scale Together: Higher memory also means more CPU power, which can speed up execution, especially for CPU-bound functions.

- Cost vs. Performance: Surprisingly, more memory can sometimes lower costs by reducing execution time.

- Example: For some workloads, a 1536 MB configuration costs less than 512 MB because it finishes fast enough to offset its higher per-millisecond price.

- Key Metrics to Track: Use Amazon CloudWatch to monitor memory usage, execution time, and billed duration.

- Tools to Optimise:

  - AWS Lambda Power Tuning: Tests memory settings for cost-performance balance.

  - AWS Compute Optimizer: Offers memory recommendations based on usage data.

Quick Tip

Start small. Adjust memory settings gradually and monitor performance after each change. Use tools like CloudWatch and Power Tuning to find the sweet spot where performance meets cost efficiency. If you're a UK-based business, remember to factor in UK GDPR compliance and the cost savings available from AWS Graviton2 (Arm) processors.

For UK SMBs, optimising Lambda memory can mean lower monthly bills and faster applications. Let’s dive into how to do it step by step.


How AWS Lambda Memory Allocation Works

Understanding how AWS Lambda allocates memory is essential for fine-tuning your functions, especially when balancing cost and performance. Unlike traditional server setups, Lambda’s memory configuration doesn’t just dictate how much RAM your function gets - it also determines the CPU power available. In other words, memory allocation directly influences your function’s performance.

You can set memory for a Lambda function anywhere from 128 MB to 10,240 MB, adjusting in 1-MB increments. This flexibility allows precise resource control, provided you understand how it affects both performance and costs.

How Memory Impacts CPU Power

One of the key aspects of AWS Lambda is that CPU power scales with memory. For instance, a function configured with 256 MB of memory will have roughly double the CPU capacity of one set at 128 MB. At 1,769 MB, your function gets the equivalent of one full vCPU. CPU continues to scale above that point, up to six vCPUs at the 10,240 MB maximum.
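As a rough mental model, you can treat CPU share as proportional to memory. The sketch below assumes a straight linear scale of one vCPU per 1,769 MB, capped at six vCPUs. AWS does not publish an exact formula, so treat the output as an approximation only.

```python
MIN_MEMORY_MB = 128
MAX_MEMORY_MB = 10_240
MB_PER_VCPU = 1_769  # roughly one full vCPU at this memory setting
MAX_VCPUS = 6        # CPU ceiling at the 10,240 MB maximum


def approx_vcpus(memory_mb: int) -> float:
    """Estimate the vCPU share for a memory setting, assuming linear scaling."""
    if not MIN_MEMORY_MB <= memory_mb <= MAX_MEMORY_MB:
        raise ValueError(
            f"memory must be between {MIN_MEMORY_MB} and {MAX_MEMORY_MB} MB"
        )
    return min(memory_mb / MB_PER_VCPU, MAX_VCPUS)


if __name__ == "__main__":
    for mb in (128, 256, 1_769, 10_240):
        print(f"{mb:>6} MB -> ~{approx_vcpus(mb):.2f} vCPU")
```

Under this linear assumption, 10,240 MB works out at about 5.8 vCPUs, slightly below the six AWS quotes. That gap shows the model is only an approximation at the top end.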

Memory and Performance Dynamics

The link between memory and CPU creates some interesting performance outcomes. For example, in one test, a Lambda function with 128 MB of memory took 900 ms to complete. Increasing the memory to 512 MB cut the execution time to 400 ms, while setting it to 1024 MB reduced it further to just 200 ms.

These improvements aren’t just about having more RAM - the additional CPU power plays a big role, especially for compute-heavy tasks, file processing, and even reducing cold start times. However, there’s a trade-off: while higher memory settings can significantly speed up execution, they also increase the cost per second of running the function. So, even though execution times drop, the overall cost might not always decrease.
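To see why faster is not automatically cheaper, compare the duration charge of each configuration in GB-seconds: memory in GB multiplied by billed seconds. The sketch below plugs in the 128/512/1024 MB figures from the test above. It ignores the per-request charge, which is the same for every configuration.

```python
def gb_seconds(memory_mb: int, duration_ms: float) -> float:
    """Compute consumption of a single invocation in GB-seconds."""
    return (memory_mb / 1024) * (duration_ms / 1000)


# (memory in MB, measured duration in ms) from the test described above
runs = [(128, 900), (512, 400), (1024, 200)]

for memory_mb, duration_ms in runs:
    usage = gb_seconds(memory_mb, duration_ms)
    print(f"{memory_mb:>5} MB, {duration_ms} ms -> {usage:.4f} GB-s")
```

Here 512 MB and 1,024 MB both use 0.2 GB-seconds, roughly 1.8 times the 0.1125 GB-seconds at 128 MB. In this test, the extra speed raises the duration charge rather than lowering it.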

Choosing Low or High Memory Settings

For lightweight tasks like basic API handling or simple data transformations, lower memory settings (128–512 MB) are often sufficient. The default 128 MB is perfect for straightforward operations such as routing events between AWS services.

On the other hand, higher memory settings (1024 MB and above) are better suited for demanding workloads. Functions that deal with large files, complex calculations, or extensive libraries benefit from the extra CPU power. Similarly, tasks involving services like Amazon S3 or Amazon EFS, especially when handling large datasets, often see noticeable performance improvements with more memory.

It’s worth noting that CPU-bound functions - those limited by processing power - experience the most significant gains from increased memory. In contrast, network-bound functions, which rely more on external data transfers, see less of a performance boost.

To make the most of these settings, use Amazon CloudWatch to monitor metrics like memory usage and execution time. Analysing this data can help you decide whether tweaking memory allocation could further optimise performance without driving up costs unnecessarily. Next, we’ll explore how to apply these principles in a step-by-step guide to right-sizing your memory settings.

Step-by-Step Guide to Right-Sizing AWS Lambda Memory

Now that you know how memory allocation impacts performance, let’s dive into the steps to fine-tune your Lambda functions. It all starts with understanding your function’s specific needs.

Step 1: Review Function Requirements and Workloads

Begin by outlining your function’s purpose, workload patterns, and performance expectations.

Take a close look at the type of workload your function handles. For example, CPU-heavy tasks will benefit from higher memory allocations since CPU power increases with more memory. On the other hand, network-bound functions may not see much improvement from additional memory.

Consider the performance priorities of your function. For instance, customer-facing APIs that need sub-second response times will have different requirements compared to background jobs that can tolerate slower execution. Also, document key details such as how often the function runs, the size of payloads, and any seasonal usage spikes.

Step 2: Track Performance Metrics in CloudWatch

Once you’ve clarified your function’s requirements, use CloudWatch to collect and monitor performance data. Pay close attention to Max Memory Used, which shows how much memory your function actually consumed during execution. Lambda writes this value, together with Memory Size and Billed Duration, to the REPORT line in CloudWatch Logs for every invocation; it is not one of the standard CloudWatch metrics. Comparing it with your allocated memory size will reveal whether you’re over-provisioning (and wasting money) or under-provisioning (and risking performance issues).
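Because Max Memory Used arrives per invocation in the REPORT log line, a quick way to check utilisation is to parse those lines. The sketch below assumes the tab-separated REPORT format Lambda currently writes. The sample line, request ID and values are invented for illustration.

```python
import re

# Matches the fields of interest in a Lambda REPORT log line.
REPORT_PATTERN = re.compile(
    r"Duration: (?P<duration>[\d.]+) ms\s+"
    r"Billed Duration: (?P<billed>\d+) ms\s+"
    r"Memory Size: (?P<memory_size>\d+) MB\s+"
    r"Max Memory Used: (?P<max_used>\d+) MB"
)


def parse_report(line: str) -> dict:
    """Extract duration and memory figures from one REPORT line."""
    match = REPORT_PATTERN.search(line)
    if match is None:
        raise ValueError("not a Lambda REPORT line")
    memory_size = int(match["memory_size"])
    max_used = int(match["max_used"])
    return {
        "duration_ms": float(match["duration"]),
        "billed_ms": int(match["billed"]),
        "memory_size_mb": memory_size,
        "max_used_mb": max_used,
        "utilisation": max_used / memory_size,  # fraction of allocated memory used
    }


# Hypothetical REPORT line for a 512 MB function.
sample = (
    "REPORT RequestId: 3604209a-e9a3-11e6-939a-754dd98c7be3\t"
    "Duration: 12.34 ms\tBilled Duration: 13 ms\t"
    "Memory Size: 512 MB\tMax Memory Used: 200 MB"
)
print(parse_report(sample))
```

A utilisation of about 0.39, as here, suggests the function is a candidate for a smaller setting.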

"Performance testing your Lambda function is a crucial part in ensuring you pick the optimum memory size configuration. Any increase in memory size triggers an equivalent increase in CPU available to your function." - AWS Lambda Documentation

Other key metrics to track include execution duration and memory usage trends. Lambda sends metric data to CloudWatch every minute, giving you regular updates. Setting up alarms for memory usage thresholds can also help you catch potential issues before they escalate. Because memory is not a standard Lambda metric, these alarms require CloudWatch Lambda Insights.

Here are the most useful metrics and log fields for memory optimisation:

| Metric or field | Description | Where to find it |
|---|---|---|
| Duration | Time your function spends processing an event (in milliseconds) | CloudWatch metric and REPORT log line |
| Memory Size | Amount of memory allocated to your Lambda function | REPORT log line and function configuration |
| Max Memory Used | Peak memory usage during execution | REPORT log line |
| Init Duration | Time taken to initialise the runtime environment before handler execution (cold starts only) | REPORT log line |
| Billed Duration | Actual billable execution time (in milliseconds) | REPORT log line |

Look at trends over a week or more to get a broader picture of resource usage instead of relying on one-off executions.
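Once you have a week or more of Max Memory Used values, a peak and a high percentile describe the function better than any single run. The sketch below is a minimal summary using the standard library. The sample values are hypothetical, and the 95th percentile is an illustrative choice.

```python
import statistics
from typing import Sequence


def summarise_memory(max_used_mb: Sequence[int], memory_size_mb: int) -> dict:
    """Summarise several days of Max Memory Used samples for one function."""
    if len(max_used_mb) < 2:
        raise ValueError("need at least two samples to compute percentiles")
    # 'inclusive' keeps the percentile within the observed range of samples.
    p95 = statistics.quantiles(max_used_mb, n=20, method="inclusive")[18]
    peak = max(max_used_mb)
    return {
        "p95_mb": p95,
        "peak_mb": peak,
        "peak_utilisation": peak / memory_size_mb,
    }


# Hypothetical samples collected over several days for a 512 MB function.
samples = [180, 190, 200, 210, 350]
print(summarise_memory(samples, 512))
```

The single 350 MB spike sits well above the other samples. Sizing from one quiet invocation would have missed it.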

Step 3: Adjust Memory Settings Gradually

Using the data you’ve gathered, start tweaking memory settings step by step. Avoid making large changes all at once, as small adjustments provide a better path to finding the right balance.

For example, if your function typically uses 200 MB but is set at 512 MB, try lowering it to 384 MB rather than cutting it in half. Similarly, if your function is struggling with memory, increase it by 25–50% instead of doubling the allocation.
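The rule of thumb above can be written as a small helper. The thresholds are assumptions chosen to reproduce the example, not AWS guidance:

- a 25% step up or down;
- 20% headroom over the observed peak;
- 90% utilisation treated as "struggling".

```python
MIN_MB, MAX_MB = 128, 10_240


def next_memory_step(
    allocated_mb: int,
    max_used_mb: int,
    step: float = 0.25,       # change by 25% at a time rather than halving or doubling
    headroom: float = 0.2,    # keep at least 20% above the observed peak
    high_water: float = 0.9,  # treat >= 90% utilisation as "struggling"
) -> int:
    """Suggest the next memory setting to trial (hypothetical heuristic)."""
    if max_used_mb >= allocated_mb * high_water:
        # Close to the limit: increase by one step.
        proposed = allocated_mb * (1 + step)
    else:
        # Over-provisioned: decrease by one step, but never below peak plus
        # headroom and never above the current allocation.
        proposed = min(
            allocated_mb,
            max(allocated_mb * (1 - step), max_used_mb * (1 + headroom)),
        )
    # Clamp to Lambda's 128 MB to 10,240 MB range (whole MB).
    return int(min(max(round(proposed), MIN_MB), MAX_MB))


print(next_memory_step(512, 200))  # over-provisioned case from the text
print(next_memory_step(512, 480))  # near the limit
```

For the 512 MB function using 200 MB, this proposes 384 MB, matching the example above.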

You can make these changes through the AWS Console or by updating your infrastructure code. After each adjustment, monitor the function for at least a week to collect enough data before making further changes.
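For a one-off change outside your infrastructure code, Lambda's UpdateFunctionConfiguration API accepts a new MemorySize; boto3 exposes it as update_function_configuration. The sketch separates building the request from sending it, so the validation can be checked without AWS access. "orders-api" is a hypothetical function name.

```python
def build_memory_update(function_name: str, memory_mb: int) -> dict:
    """Build keyword arguments for Lambda's UpdateFunctionConfiguration call."""
    if not 128 <= memory_mb <= 10_240:
        raise ValueError("MemorySize must be between 128 and 10,240 MB")
    return {"FunctionName": function_name, "MemorySize": memory_mb}


def apply_memory_update(function_name: str, memory_mb: int) -> dict:
    """Send the change; requires boto3 and AWS credentials with permission to update the function."""
    import boto3  # imported here so the builder can be used without AWS access

    client = boto3.client("lambda")
    return client.update_function_configuration(
        **build_memory_update(function_name, memory_mb)
    )


if __name__ == "__main__":
    # "orders-api" is a hypothetical function name.
    print(build_memory_update("orders-api", 384))
```

If the function is managed by CloudFormation, SAM or Terraform, change the template instead. Otherwise the next deployment will overwrite the value.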

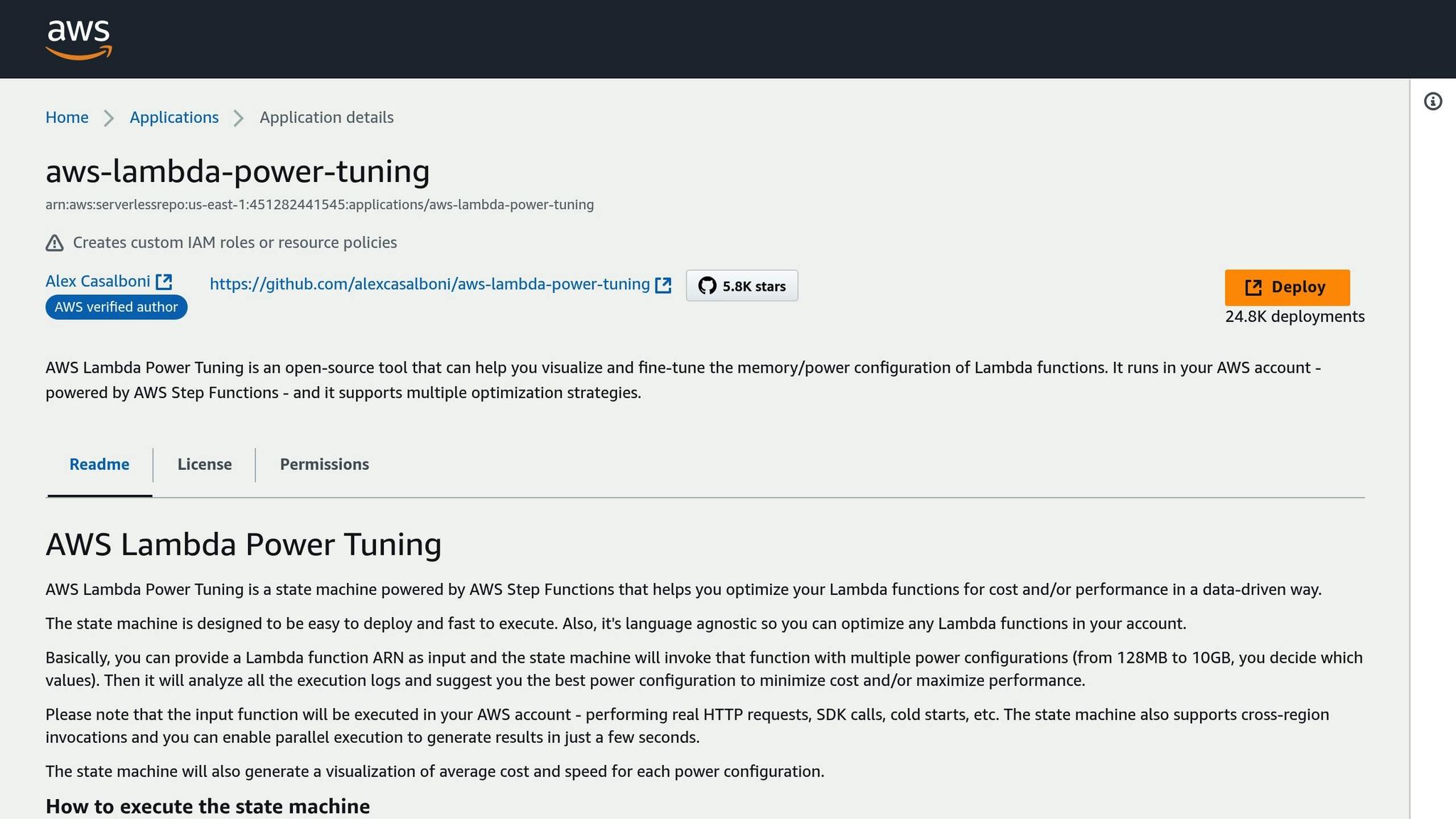

Step 4: Use AWS Lambda Power Tuning

For a more systematic approach, try the AWS Lambda Power Tuning tool. This open-source tool tests your function across multiple memory configurations, giving you a clear picture of how memory impacts both performance and cost.

"AWS Lambda Power Tuning is an open-source tool that addresses this problem by systematically finding the best memory configuration for a given Lambda function." - AWS Lambda Power Tuning Project

Set up the tool to test a range of memory allocations, including options both above and below your current setting. The tool generates visual charts that show the trade-offs between execution time and cost for each configuration. This makes it easier to choose the best setup based on your goals - whether it’s reducing costs, improving performance, or finding a middle ground.
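Power Tuning runs as a Step Functions state machine that you deploy into your account, and each run is started with a JSON input. The input below uses the lambdaARN, powerValues, num, payload, parallelInvocation and strategy fields described in the project's documentation. The following are all hypothetical and should be adapted:

- testing half, one, two and three times the current setting;
- both ARNs;
- the empty payload.

```python
import json


def build_power_tuning_input(function_arn: str, current_mb: int,
                             strategy: str = "balanced", num: int = 50) -> dict:
    """Build an execution input for the Power Tuning state machine."""
    if strategy not in ("cost", "speed", "balanced"):
        raise ValueError("strategy must be 'cost', 'speed' or 'balanced'")
    # Test settings below, at and above the current value (hypothetical choice).
    candidates = {current_mb // 2, current_mb, current_mb * 2, current_mb * 3}
    power_values = sorted(v for v in candidates if 128 <= v <= 10_240)
    return {
        "lambdaARN": function_arn,
        "powerValues": power_values,
        "num": num,                 # invocations per memory setting
        "payload": {},              # replace with a representative test event
        "parallelInvocation": True,
        "strategy": strategy,
    }


def start_tuning(state_machine_arn: str, tuning_input: dict) -> str:
    """Start a tuning run; requires boto3, AWS credentials and a deployed Power Tuning stack."""
    import boto3

    sfn = boto3.client("stepfunctions")
    response = sfn.start_execution(
        stateMachineArn=state_machine_arn, input=json.dumps(tuning_input)
    )
    return response["executionArn"]


if __name__ == "__main__":
    arn = "arn:aws:lambda:eu-west-2:123456789012:function:orders-api"  # hypothetical
    print(json.dumps(build_power_tuning_input(arn, 512), indent=2))
```

Choosing "cost", "speed" or "balanced" as the strategy is how you tell the tool which of the goals described above to optimise for.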

For example, using AWS Lambda Power Tuning, a CPU-intensive function was found to perform most efficiently at 512 MB, offering the best balance between resource usage and cost per invocation.

Once you’ve identified the optimal memory setting, apply the changes and keep monitoring. Regular checks ensure your function stays efficient as workload patterns evolve.

Best Practices for Continuous Memory Optimisation

Optimising memory for AWS Lambda functions isn't a one-and-done task. As your functions evolve and workloads shift, regular monitoring and adjustments are essential to keep performance sharp and costs under control. Here are some practical steps to help you maintain efficient Lambda operations.

Set Up Regular Monitoring with CloudWatch and AWS Compute Optimizer

AWS Compute Optimizer is a powerful tool that uses machine learning to recommend memory configurations for Lambda functions, making it particularly useful for UK small and medium-sized businesses (SMBs) looking to balance cost and performance. By analysing 14 days' worth of CloudWatch data (with a minimum of 50 function runs during this period), it provides actionable insights to fine-tune memory allocation.

To start, enable AWS Compute Optimizer through the AWS Console. The service works seamlessly with CloudWatch, automatically pulling memory usage data. For deeper insights, CloudWatch Lambda Insights can monitor memory usage metrics, though it requires installing a Lambda extension, which adds to your monthly expenses. If you're on a tighter budget, CloudWatch Logs Insights offers a cost-effective alternative. You can create custom queries to track memory usage trends and identify inefficiencies.
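A Logs Insights query over the REPORT lines is one way to spot over-provisioning cheaply. The query below is modelled on the over-provisioned-memory sample query AWS publishes; @memorySize and @maxMemoryUsed are reported in bytes, hence the division. The log group name is hypothetical, and the seven-day window is an arbitrary default.

```python
import time
from typing import Optional

MEMORY_QUERY = """\
filter @type = "REPORT"
| stats max(@memorySize / 1000 / 1000) as provisionedMemoryMB,
        avg(@maxMemoryUsed / 1000 / 1000) as avgMemoryUsedMB,
        max(@maxMemoryUsed / 1000 / 1000) as maxMemoryUsedMB,
        provisionedMemoryMB - maxMemoryUsedMB as overProvisionedMB
"""


def build_query_params(log_group: str, days: int = 7,
                       now: Optional[float] = None) -> dict:
    """Keyword arguments for CloudWatch Logs StartQuery over the last `days` days."""
    end = int(time.time() if now is None else now)
    start = end - days * 24 * 60 * 60
    return {
        "logGroupName": log_group,
        "startTime": start,   # epoch seconds
        "endTime": end,
        "queryString": MEMORY_QUERY,
    }


def run_memory_query(log_group: str, days: int = 7,
                     poll_seconds: float = 2.0) -> dict:
    """Run the query and wait for it to finish; requires boto3 and AWS credentials."""
    import boto3

    logs = boto3.client("logs")
    query_id = logs.start_query(**build_query_params(log_group, days))["queryId"]
    while True:
        result = logs.get_query_results(queryId=query_id)
        if result["status"] in ("Complete", "Failed", "Cancelled", "Timeout"):
            return result
        time.sleep(poll_seconds)
```

Logs Insights bills by data scanned, so a narrow time window keeps each run inexpensive.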

Set up CloudWatch alarms to flag potential issues before they escalate. For example, you might monitor when memory usage exceeds 80% of the allocated limit, identify sudden spikes in execution time, or track unusual invocation patterns. These alerts can help you address problems proactively, safeguarding both performance and customer experience.
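An alarm at 80% memory needs a metric that carries utilisation. The sketch assumes CloudWatch Lambda Insights is enabled, which publishes memory_utilization (a percentage) in the LambdaInsights namespace with a function_name dimension; confirm the metric name in your account before relying on it. The alarm name, five-minute period and three evaluation periods are hypothetical choices. To be notified, you would also add AlarmActions, for example an SNS topic ARN.

```python
def build_memory_alarm(function_name: str, threshold_pct: float = 80.0) -> dict:
    """Keyword arguments for CloudWatch PutMetricAlarm on Lambda Insights memory."""
    return {
        "AlarmName": f"{function_name}-memory-utilisation-high",
        "Namespace": "LambdaInsights",
        "MetricName": "memory_utilization",
        "Dimensions": [{"Name": "function_name", "Value": function_name}],
        "Statistic": "Maximum",
        "Period": 300,              # seconds per evaluation window
        "EvaluationPeriods": 3,     # breach must persist for three windows
        "Threshold": threshold_pct,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
    }


def create_memory_alarm(function_name: str, threshold_pct: float = 80.0) -> None:
    """Create or update the alarm; requires boto3 and AWS credentials."""
    import boto3

    boto3.client("cloudwatch").put_metric_alarm(
        **build_memory_alarm(function_name, threshold_pct)
    )
```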

It's worth noting that AWS Compute Optimizer currently supports only x86_64 architecture. For ARM-based functions, manual monitoring techniques will be necessary.
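Compute Optimizer's findings can also be pulled programmatically. The sketch below uses boto3's compute-optimizer client and its get_lambda_function_recommendations call. It keeps only functions marked NotOptimized, together with their top-ranked memory option. The sketch does not follow nextToken pagination. The summariser is exercised on a hand-written response shaped like the API's output, not on real data.

```python
from typing import Iterable, List, Tuple


def summarise_recommendations(
    recommendations: Iterable[dict],
) -> List[Tuple[str, int, int]]:
    """Return (function ARN, current MB, top-ranked recommended MB) for NotOptimized functions."""
    rows = []
    for rec in recommendations:
        if rec.get("finding") != "NotOptimized":
            continue
        options = sorted(
            rec.get("memorySizeRecommendationOptions", []),
            key=lambda option: option.get("rank", 0),  # rank 1 is the best option
        )
        if options:
            rows.append(
                (rec["functionArn"], rec["currentMemorySize"], options[0]["memorySize"])
            )
    return rows


def fetch_recommendations(function_arns: List[str]) -> List[dict]:
    """Fetch recommendations; requires boto3, AWS credentials and Compute Optimizer opt-in."""
    import boto3

    client = boto3.client("compute-optimizer")
    response = client.get_lambda_function_recommendations(functionArns=function_arns)
    return response["lambdaFunctionRecommendations"]
```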

Add Memory Reviews to CI/CD Pipelines

Embedding memory reviews into your CI/CD pipelines ensures that optimisation happens as part of your development workflow. This approach prevents performance from drifting over time and keeps your functions operating efficiently.

Automate memory checks as part of your deployment process. For instance, you can include commands to review memory usage and performance metrics directly within your pipeline scripts. Parsing configuration files like serverless.yml or CloudFormation templates can help automate the generation of test payloads, ensuring your memory tuning reflects real-world usage scenarios.
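As one way to automate a memory check in a pipeline, the hypothetical script below reads a CloudFormation template in JSON form. It fails the build when a function's MemorySize exceeds a review threshold. It makes several simplifying assumptions:

- JSON templates only; YAML would need a parser such as PyYAML.
- SAM Globals and serverless.yml are ignored.
- A missing MemorySize counts as the 128 MB default.
- The 1,024 MB threshold is an arbitrary example.

```python
import json
import sys

LAMBDA_TYPES = {"AWS::Lambda::Function", "AWS::Serverless::Function"}
DEFAULT_MEMORY_MB = 128  # used when MemorySize is omitted


def find_memory_issues(template: dict, max_mb: int = 1_024) -> list:
    """List Lambda resources whose memory setting needs a human review."""
    issues = []
    for name, resource in template.get("Resources", {}).items():
        if resource.get("Type") not in LAMBDA_TYPES:
            continue
        memory = resource.get("Properties", {}).get("MemorySize", DEFAULT_MEMORY_MB)
        if not isinstance(memory, int):
            # e.g. {"Ref": "MemoryParam"}: cannot be checked statically
            issues.append(f"{name}: MemorySize is not a literal ({memory!r}); review manually")
        elif memory > max_mb:
            issues.append(f"{name}: MemorySize {memory} MB exceeds the {max_mb} MB review threshold")
    return issues


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as fh:
        problems = find_memory_issues(json.load(fh))
    for problem in problems:
        print(problem)
    sys.exit(1 if problems else 0)  # non-zero exit fails the pipeline step
```

Failing the step rather than auto-correcting the value fits the pull-request approach described in the next paragraph.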

Instead of directly updating functions, consider creating pull requests for memory adjustments. This keeps the process transparent, allows for team feedback, and maintains high code review standards. For organisations managing multiple Lambda projects, developing a unified CLI tool with pre-configured build scripts and CI/CD templates can save time and ensure consistent optimisation practices across teams.

Reduce Code and Dependencies

For UK SMBs aiming to cut costs and boost performance, keeping your code lean and limiting dependencies is crucial. Streamlining your codebase can significantly reduce memory usage and improve execution times.

- Trim unnecessary dependencies: Remove libraries that aren't essential to your function's execution.

- Import selectively: Instead of loading entire SDKs, import only the modules you need to minimise cold start delays.

- Use global variables and singletons: Initialise SDK clients and connections outside the handler so they are reused across invocations until the execution environment shuts down, reducing overhead.

- Optimise Java and Node.js functions: For Java, store dependency .jar files in a separate /lib directory to speed up cold starts. For Node.js, apply techniques like code minification to shrink the package size.
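The global-variable and lazy-loading advice can be sketched as follows. ExampleClient is a hypothetical stand-in for something expensive to create, such as a database connection or boto3.client("s3"). The module-level cache lives as long as the execution environment, so warm invocations skip the set-up cost.

```python
import time

_client = None  # cached for the lifetime of the execution environment


class ExampleClient:
    """Hypothetical stand-in for an expensive client or connection."""

    instances = 0

    def __init__(self):
        ExampleClient.instances += 1
        time.sleep(0.01)  # simulate connection set-up cost

    def fetch(self, key: str) -> str:
        return f"value-for-{key}"


def get_client() -> ExampleClient:
    """Create the client on first use (lazy loading) and reuse it afterwards."""
    global _client
    if _client is None:
        _client = ExampleClient()
    return _client


def handler(event, context):
    """Lambda entry point: only the first invocation in an environment pays for set-up."""
    return {"result": get_client().fetch(event["key"])}
```

Creating the client lazily, rather than at import time, also keeps its cost out of cold starts for invocations that never need it.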

Breaking down monolithic functions into smaller, task-specific ones can also improve efficiency. Each function can be tailored to its workload, making resource allocation more precise. Additionally, techniques like lazy loading variables and limiting static initialisations can further boost performance.

Regularly auditing your dependencies is another effective way to keep memory usage in check. For Node.js, npm outdated flags outdated packages and third-party tools such as depcheck flag unused ones, while npm audit reports known vulnerabilities. Similar tools exist for other runtimes and help you find packages that unnecessarily bloat your functions.

Cost and Performance Impact for UK SMBs

For small and medium-sized businesses (SMBs) in the UK, managing AWS Lambda costs while ensuring optimal performance is a balancing act. One critical factor is memory allocation, as it directly affects both performance and expenses. Fine-tuning this balance can make a noticeable difference to monthly AWS bills, helping businesses grow sustainably.

How AWS Lambda Billing Works

To manage costs effectively, it’s important to understand how AWS Lambda pricing works. The billing model is straightforward, based on the number of requests and the execution time. Duration costs are tied to the amount of memory allocated to your function and are measured from the moment the code starts running until it finishes, rounded up to the nearest millisecond. AWS charges for the total gigabyte-seconds your function uses. This means that a higher-memory function with a shorter runtime might cost about the same as a lower-memory function running for a longer period.

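Here’s an example to clarify: a mobile app backend that processes three million requests a month with 1,536 MB of memory on an x86 architecture would cost approximately £2.11 per month. The exact figure depends on the average duration of each request and on whether the free tier applies.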

The AWS Lambda free tier provides one million free requests and 400,000 GB-seconds of compute time each month. This often covers the costs of development and testing phases. Additionally, functions running on Graviton2 processors can offer up to 34% better price performance compared to x86 processors, making them a smart choice for businesses handling large data volumes. For UK SMBs, these savings sit alongside the local reporting and compliance considerations covered next.
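The billing model above reduces to a short formula: requests plus GB-seconds, minus the free tier. The sketch below is a minimal monthly estimate under several assumptions:

- Prices are parameters, because they vary by region and architecture (Arm/Graviton2 is priced lower per GB-second than x86). Take current values from the AWS Lambda pricing page.
- avg_duration_ms should already reflect billed, rounded-up milliseconds.
- The whole free-tier allowance is treated as available to this one function, although in practice it is shared across the account.

```python
from dataclasses import dataclass

FREE_REQUESTS = 1_000_000     # free requests per month
FREE_GB_SECONDS = 400_000     # free compute per month


@dataclass
class Workload:
    requests_per_month: int
    avg_duration_ms: float    # average billed duration per invocation
    memory_mb: int


def monthly_cost(w: Workload, price_per_gb_second: float,
                 price_per_million_requests: float,
                 apply_free_tier: bool = True) -> float:
    """Estimate a monthly Lambda bill in the currency the prices are given in."""
    gb_seconds = w.requests_per_month * (w.avg_duration_ms / 1000) * (w.memory_mb / 1024)
    billable_requests = w.requests_per_month
    if apply_free_tier:
        gb_seconds = max(gb_seconds - FREE_GB_SECONDS, 0.0)
        billable_requests = max(billable_requests - FREE_REQUESTS, 0)
    compute = gb_seconds * price_per_gb_second
    requests = billable_requests / 1_000_000 * price_per_million_requests
    return compute + requests
```

Comparing the same Workload at two memory sizes, with the duration you measured at each, shows whether the faster configuration really is cheaper.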

UK-Specific Costs and Compliance Requirements

UK businesses typically report AWS costs in GBP and use the DD/MM/YYYY date format in their records. Regularly reviewing memory allocation is a practical way to keep those GBP costs manageable.

Compliance is another key factor. Post-Brexit, the UK GDPR, alongside the Data Protection Act 2018, remains the primary data protection framework. It requires organisations to implement measures that ensure the confidentiality, integrity, and availability of systems handling personal data. For Lambda functions processing personal data, changes to memory and performance settings should go through the same controls as any other change: risk assessments, backups, and audit trails that meet UK standards.

Incorporating compliance checks into your optimisation process means a single regular review covers both regulatory adherence and performance. For example, setting up monthly cost dashboards in GBP and enabling alerts can help monitor expenses. Using Compute Savings Plans could further reduce costs by up to 17%. Regular security assessments, as recommended by the Information Commissioner’s Office (ICO), should include reviews of Lambda configurations. Documenting memory settings and their impact on data processing speed and security, using UK-specific formats, can streamline compliance audits.

For more tips on managing AWS costs and performance tailored to small and medium-sized businesses, check out AWS Optimization Tips, Costs & Best Practices for Small and Medium sized businesses.

Conclusion: Key Points for AWS Lambda Memory Optimisation

For UK small and medium-sized businesses (SMBs), fine-tuning AWS Lambda memory settings is a smart way to manage both performance and costs. One key point to remember: memory and CPU are directly connected. For example, at 1,769 MB of memory, a function gains the equivalent of one vCPU. This link means allocating memory wisely can boost execution speed while keeping costs under control.

Keeping an eye on performance and making small, regular adjustments is equally important. Tools like Amazon CloudWatch allow you to monitor metrics and set alerts when memory usage gets close to its limit, helping you avoid slowdowns. Consider this: a 128 MB function may take 1.5 seconds to cold start, while a 1,024 MB function may do so in around 800 milliseconds.

AWS Lambda Power Tuning is another handy tool for comparing memory configurations. Pair it with AWS Compute Optimizer, which uses machine learning to provide tailored recommendations, and you can eliminate much of the guesswork. For businesses with frequent code updates, scheduling regular memory reviews is especially beneficial.

Cost savings don’t just stop at memory adjustments. Switching to Graviton2 processors, for instance, can improve price performance by up to 34% compared to x86 processors. Additionally, Compute Savings Plans offer discounted rates for Lambda usage, which can make a noticeable difference to monthly AWS bills for UK businesses.

To put this into perspective, in one example increasing memory from 512 MB to 1,024 MB reduced execution time from 6 seconds to 2.5 seconds. This adjustment also lowered costs from £40.20 to £33.25 per million executions. It’s a clear example of how higher memory allocation can save money when the speed-up is large enough to offset the higher memory price. These changes can be seamlessly integrated into deployment pipelines without disrupting workflows.

For UK SMBs, embedding memory optimisation into CI/CD pipelines and ensuring compliance with UK GDPR regulations helps maintain cost efficiency and legal alignment. By combining technical adjustments with solid business practices, businesses can achieve sustainable growth in a serverless computing environment.

FAQs

How does adjusting memory allocation in AWS Lambda impact performance and costs, and why can increasing memory sometimes save money?

When you increase memory allocation in AWS Lambda, you're not just getting more memory – you're also gaining extra CPU power. This can make a noticeable difference in execution speed, especially for tasks that demand a lot of resources. The faster your function runs, the less time it spends executing, which can help balance out the higher per-millisecond cost of using more memory.

In fact, choosing a higher memory setting often shortens the total execution time, which can actually lower overall costs, even if the memory price is higher. This is particularly useful for workloads involving heavy computations or those that need quicker processing. Striking the right balance between memory and CPU can help you optimise both performance and cost efficiently.

How can I monitor and optimise AWS Lambda memory settings to improve cost and performance?

To keep your AWS Lambda memory settings in check and running smoothly, leverage Amazon CloudWatch Lambda Insights along with CloudWatch metrics. These tools let you track essential performance indicators like memory usage, execution time, and cold starts. They offer a clear picture of how your functions are performing, making it easier to pinpoint inefficiencies.

On top of that, the AWS Compute Optimizer uses machine learning to suggest memory allocation adjustments. This helps you find the perfect balance between cutting costs and maintaining performance. By regularly reviewing these metrics and recommendations, you can make informed adjustments to ensure your Lambda functions remain efficient and budget-friendly.

How can UK small businesses optimise AWS Lambda functions for cost, performance, and compliance?

For small businesses in the UK, getting the most out of AWS Lambda functions means finding the right balance between cost, performance, and compliance. A good starting point is to determine the optimal memory allocation and processor type (x86 or ARM/Graviton). Tools like AWS Compute Optimizer or Lambda Power Tuning can help you fine-tune these settings to maximise performance while keeping costs low.

Boosting performance might involve load testing your functions to identify bottlenecks and considering ARM processors, which can often deliver better value for money. On the compliance side, it’s essential to ensure your setup adheres to UK data protection law, namely the UK GDPR and the Data Protection Act 2018. Also, keep an eye on data transfer expenses to avoid any unpleasant surprises.

To keep your costs under control, take advantage of AWS Cost Explorer. It helps you monitor spending and spot trends that call for resource adjustments, so your setup stays efficient and aligned with your business needs.